Sub-millisecond Response

Process data at the source for instant decisions without network delays or cloud latency.

Bring AI processing closer to your data. Brane platforms deliver intelligent processing directly at the edge—beside sensors, manufacturing lines, and critical systems—for faster decisions, predictable costs, and complete data control.

Purpose-built accelerators deliver maximum throughput within tight power and thermal budgets.

Local processing ensures continuous operation during network outages or connectivity issues.

Keep sensitive information secure on-premises with predictable TCO and no cloud dependencies.

Delivering real AI at the edge takes more than raw hardware. We combine carefully selected accelerators, full-system integration, and trusted partnerships into platforms that are validated, supported, and ready to deploy—so you can focus on applications, not infrastructure.

CPUs, GPUs, DPUs, and FPGAs chosen for performance per watt and workload fit. Every component validated for edge deployment.

Integrated systems validated through burn-in testing and thermal profiling. Ships ready to run from day one.

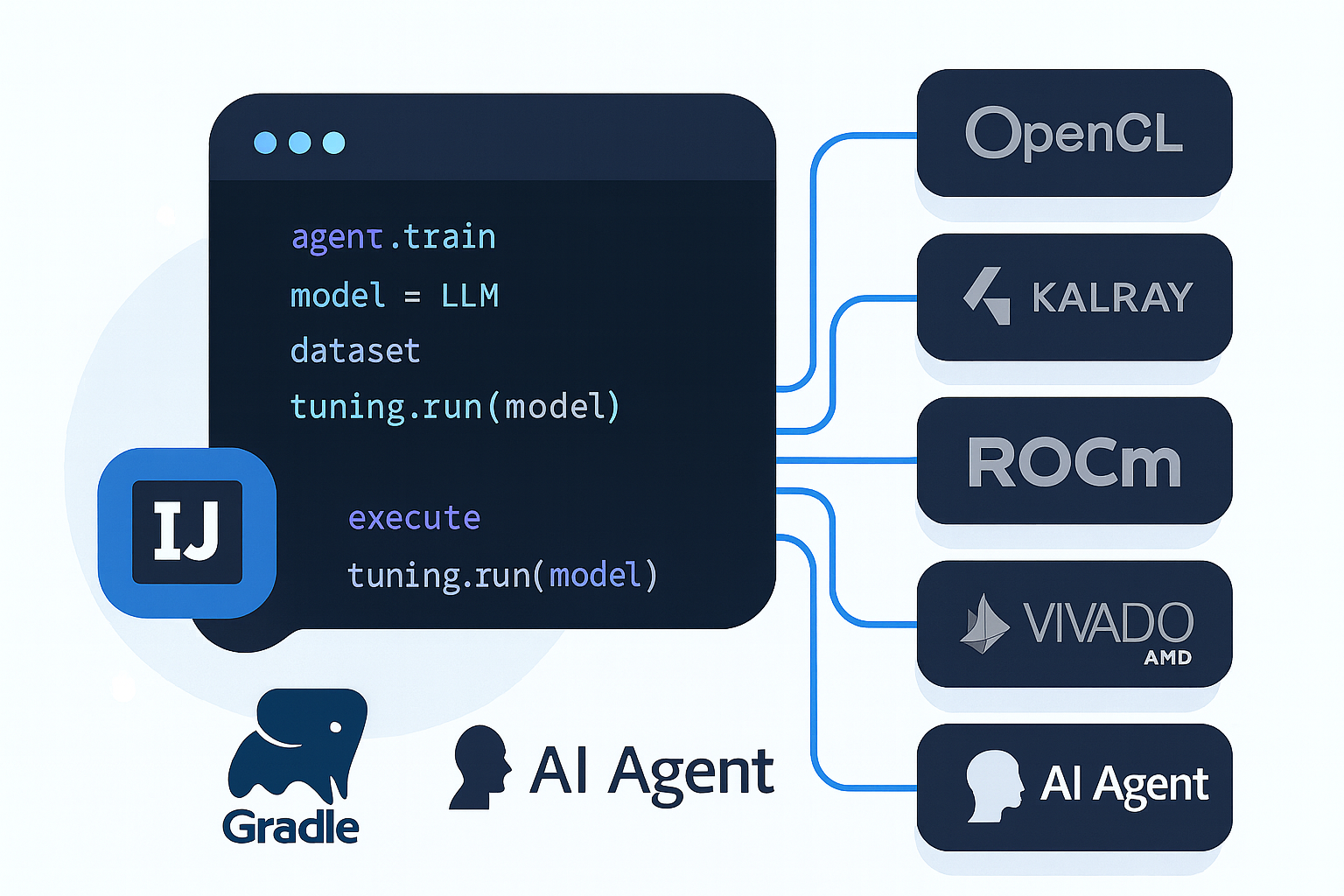

AccelOne SDK provides unified development across all processors. Build, debug, and deploy without complex toolchain setup.

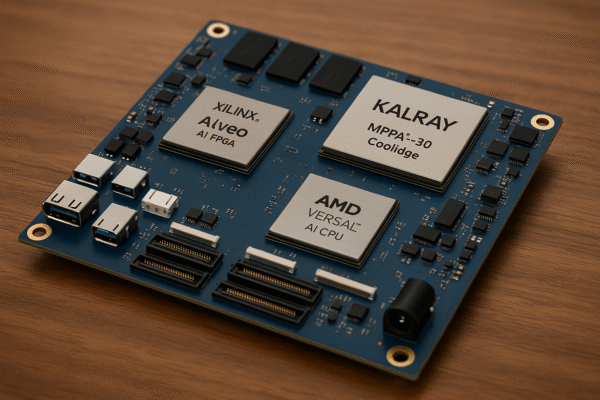

Direct collaboration with AMD, Kalray, and Xilinx ensures long-term support and access to the latest innovations.

Quiet acoustics, predictable power consumption, and reliable thermals designed for office and field environments.

Direct access to Brane experts for integration assistance, performance tuning, and proof-of-value projects.

Strategic partnerships with AMD and Kalray, plus trusted providers such as Exxact, ensure our platforms are supported, reliable, and future-proof for production deployments.

High-throughput deskside system for training and on-prem serving without datacenter complexity.

Office-ready compute with predictable power for agentic workflows and local inference.

Edge AI solution that processes data in the field for instant decisions and reliable operation.

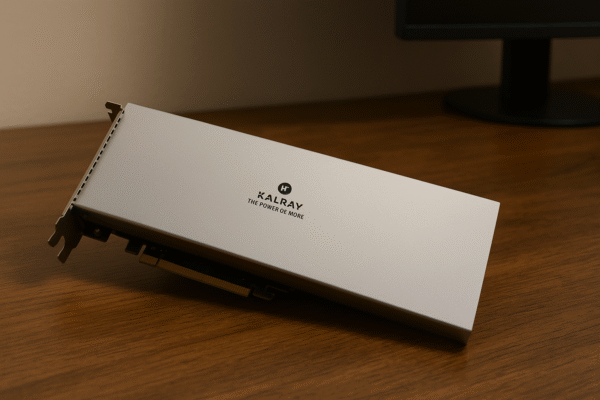

Many-core DPU for streaming data and pipeline acceleration with deterministic performance.

Unified development environment for multi-accelerator systems with integrated toolchains.

This is what’s running today. Together with AMD and Kalray, we’ve deployed open-source LLMs, Ollama stacks, and agentic pipelines on Brane workstations — then pushed the same containers to Brane Boards for field use.
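As a rough illustration of what local inference against an Ollama stack can look like, the sketch below sends a prompt to an Ollama server on the same machine through its `/api/generate` REST endpoint. It assumes Ollama is listening on its default port (11434). The model name `llama3` and the helper names `build_request` and `generate` are placeholders chosen for this example, not part of any Brane tooling.

```python
import json
import urllib.request

# Default local Ollama endpoint; adjust if the server runs elsewhere (assumption).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt, model="llama3", url=OLLAMA_URL):
    """Build a non-streaming generate request for a local Ollama server.

    The model name is a placeholder: use whichever model has been pulled locally.
    """
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt, model="llama3", url=OLLAMA_URL, timeout=60):
    """Send the prompt and return the generated text from the JSON reply."""
    request = build_request(prompt, model, url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read())["response"]
```

With a server running, `generate("Summarize the last sensor reading in one sentence.")` would return the model's reply as a string. Because the request never leaves the machine, the same call should behave the same way whether the container runs on a workstation or on a board in the field.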

Datacenter-class throughput in a workstation form factor, used for daily development and operations.

The same containers move to Brane Boards with no toolchain drift — deterministic latency and predictable power at the edge.

Access detailed documentation to install, configure, and optimize your Brane-powered workstation and SDK stack — from system setup to advanced accelerator tuning.

Build faster with sample projects, API references, and real-world examples for programming across heterogeneous platforms — including AMD ROCm, Xilinx, Kalray MPPA, and more.

Have questions or custom requirements? Our engineering team offers expert support for Brane systems — from setup to deep integration across your compute stack.